Let's face it -- π sucks. Not pie; pie is genuinely awesome, and so is the fact that "pie" sounds like "π", so we can have fun with things like this. But seriously... π? It's totally off by a factor of 2!

For more on τ day, check out the Tau Manifesto at http://tauday.com/ and of course http://halftauday.com/ if you're still a fan of π.
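For the curious, the factor of 2 is easy to see in code. Here's a minimal Python sketch, using the `math.tau` constant that Python's standard library ships alongside `math.pi`. The helper names `circumference` and `turn_fraction_to_radians` are my own, made up purely for illustration.

```python
import math


def circumference(radius: float) -> float:
    """Circumference of a circle: tau * r (the same thing as 2 * pi * r)."""
    return math.tau * radius


def turn_fraction_to_radians(fraction: float) -> float:
    """Angle in radians for a given fraction of a full turn."""
    return fraction * math.tau


if __name__ == "__main__":
    # tau is just 2 * pi, so one full turn is tau radians.
    print("tau equals 2*pi:", math.isclose(math.tau, 2 * math.pi))
    # A quarter turn is tau/4, which in pi-speak is the less obvious pi/2.
    print("quarter turn:", turn_fraction_to_radians(0.25), "vs pi/2:", math.pi / 2)
```

With τ, a fraction of a turn maps straight onto the same fraction of the constant, which is really the whole argument.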

Showing posts with label mathematics. Show all posts

Knotty Doodles

By Paul on Wednesday, December 8, 2010 at 9:12 AM

Labels: art, educational, entertainment, mathematics

Talk on The Math, Physics of Drag Racing

By Paul on Friday, October 29, 2010 at 5:00 PM

Labels: academic, communicating science, educational, entertainment, mathematics, noteworthy people, physics, science basics

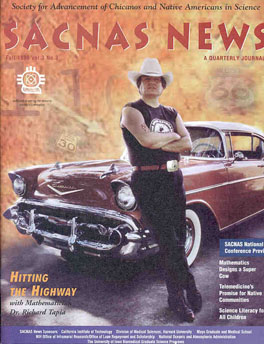

Thursday (4 Nov) there is a public lecture at COSI in Columbus you don't want to miss. The talk will be given by Dr. Richard A. Tapia -- a big name in applied mathematics, an entertaining speaker, and long-time "champion of under-represented minorities in the sciences."

Tapia has received numerous professional and community service honors and awards including the annual Blackwell-Tapia Conference being named in his honor (his reason for visiting Columbus) and being inducted into the Texas Science Hall of Fame (yes, such a thing really does exist!).

Here are the details of his talk from the event flier (PDF):

Math at Top Speed: Exploring and Breaking Myths in the Drag Racing Folklore

November 4, 2010; 7:00pm @ COSI (doors open @ 6:00pm) Admission is free

For most of his life, Richard Tapia has been involved in some aspect of drag racing. He has witnessed the birth and growth of many myths concerning dragster speed and acceleration. Some of these myths will be explained and validated in this talk, while others will be destroyed. For example, Dr. Tapia will explain why dragster acceleration can be greater than the acceleration due to gravity, an age-old inconsistency, and he will present his Fundamental Theorem of Drag Racing. Part of this talk will be a historical account of the development of drag racing and several lively videos will accompany this discussion.

Speaker: Richard Tapia

University Professor, Maxfield-Oshman Professor in Engineering, Department of Computational and Applied Mathematics (CAAM), Rice University

More about Dr. Tapia can be found here, here, and here. More on the Blackwell-Tapia Conference can be found by clicking the "Blackwell-Tapia" link on this website.

Free Online Math Books?

By Paul on Tuesday, October 5, 2010 at 7:48 PM

Labels: education, math/science computation, mathematical biology, mathematics

I was poking around the web for a copy of Euclid's Elements, and came across a nice list of over 75 freely available online math books. There's a good mix of material there, ranging from centuries-old classics up to modern-day course topics and modern application areas - something for everybody. Check it out!

More music you¹ can do math to...

Apparently, Pandora insists I get at least one kick-ass song a day in which G. W. Bush is quote mined about war. So far, so good.

Math & The Oh-So-Musical Ministry

While working on a proof just now, this little beauty came pouring through my headphones. Great beat for doing math to, hilarious quote mining... what's not to love!?

Elements of Math, by Steven Strogatz

By Paul on Thursday, July 8, 2010 at 12:19 AM

Labels: education, mathematics, noteworthy people, science basics, science literacy

Courtesy of the editors at the New York Times Opinionator blog:

Professor Strogatz’s 15-part series on mathematics, which ran from late January through early May, is available on the “Steven Strogatz on the Elements of Math” page.

Go check it out! Strogatz is an excellent speaker and writer, and any minute spent reading his writing is a minute very well spent.

Math Tricks!

I love math tricks, especially those that artfully illustrate any deeper meaning behind whatever rearrangement of symbols is at hand. I'm smack in the middle of thesis work at the moment, so instead of writing this one up, I'd love to hear your explanation of what's going on in the video below!

Is there a method to this madness?

Dividing by zero

A friend of mine requested I do a post about dividing by zero... and recalling this image I just couldn't resist!

While mathematicians publicly cringe at the idea of such divisions, we don't really worry too much about them in practice... they're easy enough to avoid and when the problem does arise, well -- we have our tricks!

So what happens when you divide by zero? Broadly speaking, a few things can happen. No, the universe won't explode or anything like that, although you can do fun tricks like "prove" that 1=2 (see below).

What really happens -- if you're just dividing a number by zero -- is the outcome is undefined. Not a number. No solution. It's nonsense. Nothing interesting happens. But suppose you divide by almost zero? Then what? Can we learn anything about these "almost zero" cases?

It turns out we can, and the mathematical tools used to do so turn out to be fundamental to our ability to use math to understand real-world processes. To understand all this, however, we need to begin by thinking about such divisions in the context of functions.

Suppose you have two functions f(x) and g(x) -- that is, two curves like the ones shown above -- and you make a new curve h(x) = g(x)/f(x) that is their ratio. If the denominator f(x) is somewhere equal to zero -- in our example, at x=0 and x=1 -- then what happens for nearby values of x?

At this point, we're doing homework straight out of a first course in Calculus. To do this properly, we'd draw on one of the most useful tools in mathematics -- the notion of limits -- which allows us to formalize some otherwise intuitive ideas and develop a ton of really useful tools for understanding more complicated-looking functions (tools like, oh, say... all of calculus).

Without going into the mathematical details, these allow us to obtain precise (and often very general) statements similar to the following statements about our function h(x).

First, we know h(x) is undefined at x=0 and x=1. If we ask what happens to values of h(x) close to x=0, we begin to learn something more about h(x) which, in some applications, might be the answer to an important question. Here, let's take a look at our toy example as defined above.

Graphically, we can see that as x nears 0 from either direction, h(x) nears negative infinity. In this case, we'd say the limit as x goes to zero is negative infinity. On the other hand, as we consider x values near 1 we see that h(x) either runs off to negative infinity (x<1) or positive infinity (x>1). Here the limit goes in two different directions depending on whether you approach x=1 from below or above, so we say that at x=1 the limit does not exist. These are the usual pre-calculus questions about limits of (mostly) continuous functions.
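If you'd rather poke at this numerically than graphically, here's a small Python sketch. The post's actual f(x) and g(x) aren't spelled out in the text, so the functions below are stand-ins I picked only because they show the same behavior: f is zero at x=0 and x=1, and h = g/f heads to negative infinity on both sides of 0 but in opposite directions on either side of 1.

```python
def f(x: float) -> float:
    # Hypothetical denominator (an assumption, not the post's actual curve):
    # zero at x = 0 and at x = 1.
    return x**2 * (x - 1)


def g(x: float) -> float:
    # Hypothetical numerator (also an assumption): the constant 1.
    return 1.0


def h(x: float) -> float:
    # The ratio curve; undefined wherever f(x) == 0.
    return g(x) / f(x)


def approach(point: float, side: int, steps: int = 5) -> list[float]:
    """Evaluate h at x values closing in on `point`.

    side = -1 approaches from below, side = +1 from above.
    The offsets shrink as 0.1, 0.01, ..., so we never land exactly on `point`.
    """
    return [h(point + side * 10.0**-k) for k in range(1, steps + 1)]


if __name__ == "__main__":
    for point in (0.0, 1.0):
        for side, label in ((-1, "below"), (1, "above")):
            print(f"x -> {point} from {label}: {approach(point, side)}")
```

Near x=0 both lists plunge toward large negative values, while near x=1 the "below" list goes negative and the "above" list goes positive, which is exactly the "limit does not exist" situation.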

So what does any of this have to do with dividing by zero? After all, graphically this all seems a bit pointless!

It turns out that the problem of dividing by zero is the comical counterpart to some of the most powerful and ubiquitous tools in mathematics. The properties of limits used to derive results like those above (from equations, instead of graphs) are the same concepts that provide a foundation for scientific theories in engineering, statistics, biology, chemistry, physics, and medicine.

A great place to build a foundation for understanding and using those tools is with a little bit of calculus, probability and statistics - any one of which would require more than another post or two, but might be well worth doing.

Until then, if you'd like a little calculus refresher there are a handful of free resources online including those here, here and here (all three of which are reviewed here).

As always, suggestions and requests can be shared via email or in the comments below.

R code for the figures above:

How big is a billion? Well... it depends.

Over at the (very awesome) blog Bad Astronomy, Phil Plait ponders the enormity of a billion, citing an image made up of a grid of dots from this post at Tomorrow is Another Jay. Here's the image...

All this made me wonder about the issue of dimensionality in trying to understand "a billion". Let me explain...

Previously, I'd come up with my own way of thinking about "1 billion years" in terms of distances. This was all in the context of thinking about "deep time" when it comes up in geology and evolution (that post is here). After all, today, people get to travel quite large distances pretty easily, yet we can also appreciate the idea of small distances like centimeters and millimeters.

That's how the question of dimensionality came up. The grid above is a two-dimensional grid. In contrast, my distance example is a one-dimensional illustration of "a billion". So does dimensionality matter? Does it matter whether we're thinking about "a billion" in terms of a length, an area or a volume?
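One way to make the question concrete (a quick Python sketch, not an answer) is to ask how long the side of a line, a square, or a cube has to be to hold a billion unit cells. The function name `side_length` is just something I made up for this illustration.

```python
def side_length(count: float, dimensions: int) -> float:
    """Side length of a line (1), square (2), or cube (3) holding `count` unit cells."""
    return count ** (1.0 / dimensions)


if __name__ == "__main__":
    billion = 1e9
    for dims, shape in ((1, "line"), (2, "square"), (3, "cube")):
        side = side_length(billion, dims)
        print(f"{shape}: about {side:,.0f} units per side")
```

The same billion is a billion units long as a line, a bit under 32,000 units on a side as a square, and only about a thousand units on a side as a cube.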

I haven't quite worked out an answer yet, but I think it does. To illustrate this fact,

Math, Computers and Intelligent Design Pseudoscience

By Paul on Friday, August 21, 2009 at 12:53 PM

Labels: flawed argument, intelligent design (creationism), math/science computation, mathematics

Here's a recently published paper (PDF reprint) by intelligent design (creationism) proponent William Dembski. Strong criticisms of the paper (and how it's being misused by other intelligent design creationism proponents) have already popped up on blogs in some posts like these, and even in Dembski's own blog - which he promptly put a stop to by disabling comments.

Instead of focusing on the paper itself, I wanted to illustrate how it (and other mathematical or computational results) can be misused in promoting ID. Before I begin, feel free to read through the blog posts and skim the paper.

After that, we can start in on this blurb from the Discovery Institute as an example of this sort of misuse by asking "So does this paper support intelligent design creationism??"

The paper itself specifies (in the abstract) what they did, and how they applied it (here and below, I have used a bold font for emphasis):

This paper develops a methodology based on these information measures to gauge the effectiveness with which problem-specific information facilitates successful search. It then applies this methodology to various search tools widely used in evolutionary search.

I happen to know a thing or two about using mathematical models in science, and this paper is a fantastic example of what I consider mathematical equivocation - using the power and complexities of mathematics as a logical tool to try and back a claim that really isn't backed by the math. It's significant because, unlike typical rhetorical arguments, the math obfuscates the assumptions and logical arguments being made, and can at times require graduate level background to decipher - so the equivocation is a bit harder (if not impossible, for some) to actually notice.

While the paper says nothing about intelligent design creationism, others (including Dembski himself) claim that it applies. Let's start with the title of the Discovery Institute piece. First, they claim this is a pro-ID publication. Second, we have our first logical fallacy: the appeal to authority. It seems that the holy grail of ID creationist efforts is to have some science-cred to wave around, and a "peer-reviewed scientific article" is exactly that. So what about the pro-ID claim?

In this blog post we get the Discovery Institute's take on what the paper is really about:

A new article titled "Conservation of Information in Search: Measuring the Cost of Success," in the journal IEEE Transactions on Systems, Man and Cybernetics A: Systems & Humans by William A. Dembski and Robert J. Marks II uses computer simulations and information theory to challenge the ability of Darwinian processes to create new functional genetic information.

Understanding why the paper has absolutely nothing to do with real functional genetic information (despite this claim) requires knowing about information theory (an unknown topic to the vast majority of people, scientists included) and that the kind of "information" discussed in the paper is very different from genetic information in a biological context. Indeed, the word genetic only appears on the first page of the article when mentioning "genetic algorithms", and there's no mention of "functional genetic" anything in the paper.

Unfortunately, intelligent design creationists frequently misuse or improperly apply the concept of "information" (which can be defined in a number of ways). Demanding a clear definition is always a good way to keep on track with what's actually being said.

Here's the closest we come to definitions for interpreting information in this paper:

- endogenous information, which measures the difficulty of finding a target using random search;

- exogenous information, which measures the difficulty that remains in finding a target once a search takes advantage of problem specific information; and

- active information, which, as the difference between endogenous and exogenous information, measures the contribution of problem-specific information for successfully finding a target.
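To see how these three quantities relate, here's a rough Python sketch. It assumes the common information-theoretic convention of measuring "difficulty" in bits as the negative base-2 log of a success probability; that convention is my reading, and the names `p_random` and `p_assisted` are hypothetical, not taken from the paper.

```python
import math


def endogenous_information(p_random: float) -> float:
    """Difficulty (in bits) of hitting the target by random search.

    Assumption: difficulty is -log2 of the success probability.
    """
    return -math.log2(p_random)


def exogenous_information(p_assisted: float) -> float:
    """Difficulty (in bits) that remains once the search uses problem-specific information."""
    return -math.log2(p_assisted)


def active_information(p_random: float, p_assisted: float) -> float:
    """Endogenous minus exogenous information, as in the third definition above."""
    return endogenous_information(p_random) - exogenous_information(p_assisted)


if __name__ == "__main__":
    # Made-up numbers: random search succeeds 1 time in 1024,
    # the assisted search succeeds 1 time in 4.
    p_random, p_assisted = 1 / 1024, 1 / 4
    print("endogenous:", endogenous_information(p_random), "bits")
    print("exogenous: ", exogenous_information(p_assisted), "bits")
    print("active:    ", active_information(p_random, p_assisted), "bits")
```

Under this convention the difference is positive when the assisted search beats random search and negative when it does worse. Note that nothing here says anything about genetics; it's all bookkeeping about search probabilities.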

Here's the Discovery Institute spinning the article against evolution "unguided" by an intelligent designer:

Darwinian evolution is, at [it's] heart, a search algorithm that uses a trial and error process of random mutation and unguided natural selection to find genotypes (i.e. DNA sequences) that lead to phenotypes (i.e. biomolecules and body plans) that have high fitness (i.e. foster survival and reproduction). Dembski and Marks' article explains that unless you start off with some information indicating where peaks in a fitness landscape may lie, any search — including a Darwinian one — is on average no better than a random search.

Note that the implication (at least to me) is that evolution requires "some information" (e.g. an intelligent designer?) if it's going to work "better than a random search." Also note the false dichotomy at play here, as the blog post seems to imply that it's a "pro-ID" publication because it allegedly refutes evolution.

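Just to clarify the straw man (a fallacy whereby, in a debate context, you misrepresent your opponent's position with something you can refute, then pretend you refuted your opponent's true position, and then assert that their being wrong makes your position correct): evolution by natural selection is equated with evolutionary algorithms, which are then criticized, and it's all put forth as being "pro-ID".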

So far, I don't see how any of this supports intelligent design creationism or refutes evolution by natural selection. Feel free to correct me if I'm wrong here!

So what's really the take home message from this paper?

Returning to the matter of equivocation, MarkCC's blog post helps to clarify the meaning of "information" in the paper, although only implicitly:

In terms of information theory, you can look at a search algorithm and how it's shaped for its search space, and describe how much information is contained in the search algorithm about the structure of the space it's going to search.

What D&M do in this paper is work out a formalism for doing that - for quantifying the amount of information encoded in a search algorithm, and then show how it applies to a series of different kinds of search algorithms.

In terms of evolution, it's really just asking how much of the information about the space (i.e. the fitness landscape) is tied up in the algorithm (i.e. natural selection). Given that natural selection is all about the relative number of offspring contributed to the next generation, these results really don't seem surprising or problematic.

The conclusions of the paper seem to be awkwardly stated and kind of amusing:

CONCLUSION: Endogenous information represents the inherent difficulty of a search problem in relation to a random-search baseline. If any search algorithm is to perform better than random search, active information must be resident. If the active information is inaccurate (negative), the search can perform worse than random...

Hmm... So in order for evolution by natural selection (as an algorithm) to work better than random, it needs to include correct information from genetics, developmental biology, ecology, and so on? Got it. If we get it wrong, it'll perform worse than some other random hypotheses? Got it.

This section also has some either very ironic or very well chosen wording...

... Accordingly, attempts to characterize evolutionary algorithms as creators of novel information are inappropriate. To have integrity, search algorithms, particularly computer simulations of evolutionary search, should explicitly state as follows: 1) a numerical measure of the difficulty of the problem to be solved, i.e., the endogenous information, and 2) a numerical measure of the amount of problem-specific information resident in the search algorithm, i.e., the active information.

Nice - "attempts to characterize" are inappropriate because we're not using your particular definition of information? Gee, now THAT sounds familiar.

To be honest, I have no real expertise in algorithms, but from what I could dig up I don't think this use of "integrity" means anything out of the ordinary. I'll run this by some computer science friends of mine and any insights will appear below.

Until then, my best response to the question "So does this paper support intelligent design creationism??" is decidedly, No.

How old is the earth?

By Paul on Friday, April 3, 2009 at 10:09 PM

Labels: earth, education, evolution, mathematics, origins

I've recently been trying to get a better grasp on the geologic time scale, and thought others might like to share in pondering what some of those gigantic numbers really mean. To do this, we'll do a comparison with the one thing we have some experience with that spans this huge range of numbers: distance.

So what kind of numbers are we talking about here? Let's start way back at the beginning, when this little rock called home (ok, or "Earth") came into existence. This happened roughly 4,500,000,000 years ago - or typing a few fewer zeros, 4.5 billion years ago.

Not quite sure how big 4.5 billion is? Consider the following: suppose a millimeter represents 1 year. That makes me "3cm old" since 1cm = 10yrs, and I'm almost 30 years old.

Continuing this line of reasoning (using the metric system, just to keep the math easy), we can get an idea of some larger numbers of years: 1 meter = 1000 yrs, 1 kilometer = 1 million yrs, and so on.
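If you want to play with the conversion yourself, here's a minimal Python sketch of the 1 year = 1 millimeter scale described above. The function names are my own, and the sample ages are just the round numbers used in this post.

```python
MM_PER_YEAR = 1.0  # the scale used in this post: 1 millimeter represents 1 year


def years_to_meters(years: float) -> float:
    """Convert a span of years to meters (1,000 mm per meter)."""
    return years * MM_PER_YEAR / 1_000


def years_to_km(years: float) -> float:
    """Convert a span of years to kilometers (1,000,000 mm per kilometer)."""
    return years * MM_PER_YEAR / 1_000_000


if __name__ == "__main__":
    print("30 years:", years_to_meters(30) * 100, "cm")
    print("1,000 years:", years_to_meters(1_000), "m")
    print("1 million years:", years_to_km(1_000_000), "km")
    print("4.5 billion years:", years_to_km(4.5e9), "km")
```

Swap in your own favorite dates to see where they land on the map.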

Next, pick your favorite reference points and see how big the distances get! How long ago was the U.S. Declaration of Independence signed? About 20cm (or 8 inches). The Black Death that swept through 14th century Europe? 0.7 meters. The fall of the Roman Empire? 1.5 meters. Jesus? 2 meters. The age of the earth according to young earth creationists? About 10 meters. The last "ice age" in North America? About 13 meters. When did dinosaurs go extinct? About 65 kilometers (5 miles is about 8 km). And so on...

So with this time-distance comparison in hand, let's start back at the beginning.

That 4.5 billion years works out to 4,500 km (about 2,800 miles). The circumference of the earth is about 40,000 km (24,900 miles), so you can think of this distance as a bit more than 1/10th of the way around the world - say, from southern California to northern Maine, or roughly from New York to southern Florida and back.

U.S. map showing what 4.5 billion, 3.5 billion & 2 billion years look like if you equate 1 year = 1 millimeter.

Think about it - all adult humans are only a few centimeters old while the history of the earth spans comparatively enormous continental distances (see the figure above)!

With that, I'll leave you to ponder some other major milestones in the history of our little blue planet:

- Life appeared about 1 billion years after earth formed, with the oldest known evidence of life dating back to around 3,500,000,000 years ago or earlier (that's 3,500 km - roughly New York to the Grand Canyon in northern Arizona).

- About 2,000,000,000 (yup, billion) years passed before multi-celled life appeared (This brings us to about the distance from Denver to Chicago - 1,500 km),

- and things didn't really get "interesting" until the famed Cambrian explosion around 530,000,000 years ago, when life diversified into the major groups we recognize today (for example, this is when we see the first plants, animals, etc.)

- During the next 50,000,000+ years (50 km or about 30 miles) organisms continue to diversify, though it takes around 300,000,000 more years for early mammals to arrive on the scene (yes, I skipped over a whole lot of invertebrates, fish, amphibians, and reptiles there), which brings us furry critters into existence a little over 200,000,000 years ago. The history of mammals on earth (only 200 km / 120 miles) is relatively quite recent!

- So what about humans? The first hominids appeared around 7,000,000 years ago (that's right, it took approximately 4.493 billion years since earth was formed 4.5 billion years ago before anything vaguely human came into existence - about 3.493 billion years after the first known evidence of life!) - as far as mammals go, 7 km out of 200 km is also quite recent, at least in my opinion.

- Finally, modern humans are thought to have appeared around 200,000+ years ago - a little over 200 meters ago!!!
